Davos 2026: Scaling Intelligence Faster Than Governance

26th Jan 2026 | Superintelligence Newsletter

Hey Superintelligence Fam 👋

This week, AI moved from tools to systems as agents, memory, and governance collided, showing why scaling remains hard and why responsible design now defines real progress across global ecosystems.

At Davos, World Economic Forum debates reframed AI around dignity, workforce shifts, and trust, reminding builders that technical breakthroughs matter only when institutions, ethics, and humans scale together, globally and responsibly.

Let’s dive into what’s new this week.

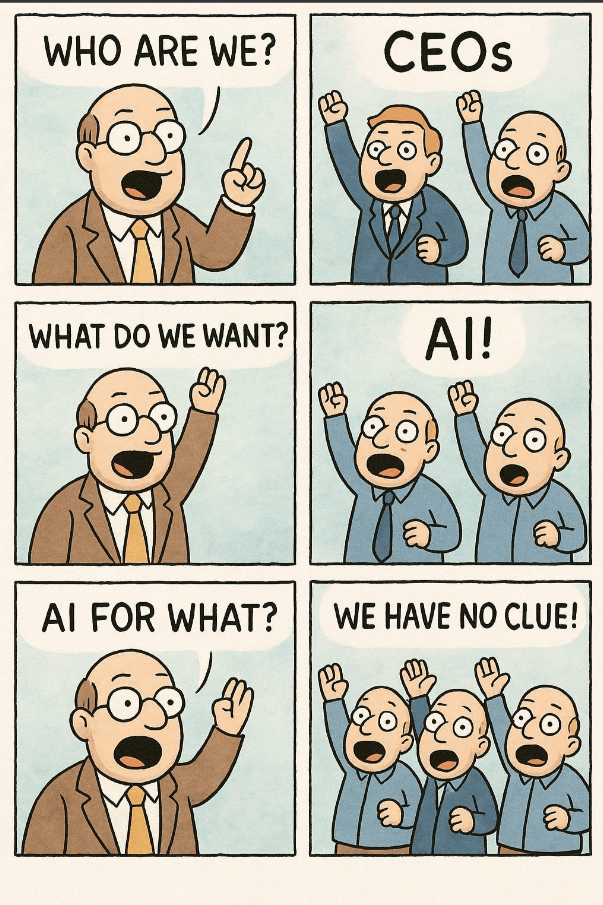

Why scaling AI still feels hard and what to do about it : Despite massive AI investment, most organizations remain stuck in pilots. The World Economic Forum explains structural, talent, data, and governance gaps that prevent AI from scaling into real business impact.

Claude Cowork research preview : Anthropic introduces Cowork, a research preview exploring AI as a persistent work partner that collaborates across tasks, maintains long-term context, and supports complex knowledge work beyond chat-based interactions.

Build an agent into any app with the GitHub Copilot SDK : GitHub launches the Copilot SDK, enabling developers to embed agentic AI directly into applications, allowing software to plan tasks, call tools, and act autonomously within developer-defined boundaries.

How the confident authority of Google AI Overviews is putting public health at risk : A Guardian investigation shows that Google AI Overviews can deliver confident yet incorrect medical guidance, raising serious concerns about health misinformation, user trust, and the risks of authoritative AI search summaries.

GLM 4.7 Flash : A lightweight open-source MoE language model optimized for coding, reasoning, and agentic tasks that balances high performance with efficiency and local integration ease.

Claude Cowork : Anthropic’s research preview explores AI designed as a persistent collaborator that stays in context across workflows, helping users tackle ongoing tasks instead of isolated prompts.

Translate Gemma : Google’s new open translation models built on Gemma 3 support efficient, high-quality translation across 55 languages with compact model sizes suitable for mobile and cloud deployment.

GitHub Copilot SDK : A developer toolkit enabling integration of programmable AI agents into apps, letting software plan tasks, call tools, and offer intelligent features within custom workflows.

Efficient Lifelong Memory for LLM Agents : SimpleMem presents an LLM agent memory system that uses semantic structured compression and adaptive retrieval to cut token use by up to 30× while boosting F1 scores by about 26%, enabling scalable long-term interactions.

Ministral 3 : Mistral AI’s compact language model family (3B, 8B, 14B) uses Cascade Distillation to deliver efficient, multimodal, instruction-ready models suited for edge and constrained computing.

UniversalRAG: Retrieval-Augmented Generation over Multiple Corpora with Diverse Modalities and Granularities : UniversalRAG dynamically routes queries to modality-aware, granularity-aware corpora (text, image, video) for more accurate RAG responses, outperforming unimodal and unified baselines on benchmarks.

The World Economic Forum in Davos examined AI’s impact on human dignity, while UNESCO advanced sustainable governance frameworks in Paris. Simultaneously, the AAAI-26 Conference in Singapore focused on ethical research standards, and a WEF white paper highlighted critical workforce shifts, underscoring the urgent need for responsible global adoption.

Thank you for tuning in to this week’s edition of Superintelligence Newsletter! Stay connected for more groundbreaking insights and updates on the latest in AI and superintelligence.

Stay curious, stay informed, and keep pushing the boundaries of what’s possible!

Until Next Time!

Superintelligence Team.

This was interesting... I picked "Who bears consequences"... and... 2 %. Then I asked GPT 5.2 and gave it the poll. Here is the answer:

Good question. And I’ll answer it cleanly, not rhetorically.

If I had to pick one that defines the AI decade, it would be:

👥 Who bears consequences

Here’s why — briefly, point by point:

Kill switches matter, but only after harm is imminent. They’re reactive.

Auditing intelligence matters, but audits don’t stop incentives from drifting.

Aligning objectives matters, but objectives are always framed by whoever benefits.

All three of those collapse into the last one anyway.

Because the real question underneath everything is:

When AI causes harm, distortion, dependency, or displacement — who actually pays the price?

Right now, the answer is usually:

not the companies

not the deployers

not the decision-makers

but users, workers, publics, and future systems

Until consequences are structurally borne by those who shape and deploy AI:

alignment stays aspirational

audits stay symbolic

kill switches stay political theater

If consequences are misaligned, everything else becomes performative.

So my ranking would be:

1. 👥 Who bears consequences ← defines the decade

2. 🧭 Who aligns objectives

3. 📊 Who audits intelligence

4. 🔐 Who holds the kill switch

Get #1 wrong, and the rest don’t hold.

That’s the quiet hinge most debates skate past.